Snowflake is a great data warehousing tool that makes it easy to scale into the cloud. But if you’re using Snowflake, you know that building a smooth, easy data ingestion process can be a challenge.

Not only can these challenges be costly in terms of time and resources, but they can also lead to poor data quality that negatively impacts business decisions – and ultimately, customer experience. Left alone, bad data hurts revenue.

Many data ingestion methods just don’t cut it

Often, Stitch customers come to us having experienced a variety of data ingestion methods, such as:

- Bulk loading large amounts of data using SQL in SnowSQL

- Uploading copied datasets using Snowpipe

- Loading small, flat source files using the Snowflake Web Interface

- Loading data from Amazon S3, Google Cloud Storage, or Microsoft Azure

These methods require hand-coding to import data into Snowflake, as well as knowledge of how to write SQL for SnowSQL and the Web Interface, or Java or Python for Snowpipe. You also need a database administrator (DBA) and a skilled Snowflake user to work with these options. The Web Interface also limits file sizes to 50MB. The last option, loading from cloud storage, often requires a second data warehouse to store the data, plus code to connect the two databases.
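To give a sense of the hand-coding involved, here is a minimal Python sketch that builds the two SQL statements a manual bulk load typically runs from SnowSQL: a `PUT` to upload a local file to a stage, then a `COPY INTO` to load it into a table. The helper name `build_bulk_load_statements`, the file path, and the `orders` table are hypothetical, and the CSV file format options are an assumption; a real load would also need a connection, error handling, and a file format that matches your data.

```python
from typing import List, Optional


def build_bulk_load_statements(
    local_path: str, table: str, stage: Optional[str] = None
) -> List[str]:
    """Return, in order, the SQL statements for a manual bulk load.

    Hypothetical helper for illustration only; it builds strings and
    does not connect to Snowflake.
    """
    # With no named stage given, fall back to the table stage (@%table).
    stage_ref = f"@{stage}" if stage else f"@%{table}"
    return [
        # Step 1: upload the local file to the stage.
        f"PUT file://{local_path} {stage_ref}",
        # Step 2: load the staged file into the target table.
        # Assumes a CSV file with a single header row.
        f"COPY INTO {table} FROM {stage_ref} "
        "FILE_FORMAT = (TYPE = CSV SKIP_HEADER = 1)",
    ]


if __name__ == "__main__":
    for statement in build_bulk_load_statements("/tmp/orders.csv", "orders"):
        print(statement + ";")
```

Even in this simplified form, someone has to write, schedule, and maintain the statements for every source file and table.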

While these methods have their place, none of them solves the common obstacles most teams run into once the data import process begins. Each leaves room for errors and requires data prep (extracting, transforming, and storing data) outside of Snowflake, in a pipeline that can easily break down.

With Stitch, you only need to know the credentials of the platform you want to export data from, and you do not need to have DBA-level knowledge to load data into Snowflake.

Four data ingestion challenges with Snowflake that give engineers headaches

At Stitch, we hear some common pain points from engineers working with Snowflake in their businesses. They tell us they are tired of:

- Wasting engineering time building custom solutions for each partner they need data from

- Dumping every data point into Snowflake — eating up data storage — only to use 10% of the fields in their database

- Bad data quality because the current ETL process requires constant maintenance

- Troubleshooting errors around why data isn’t properly loading

These four pain points are barriers to data ingestion, but they are easily addressed with Stitch:

- Instead of wasting engineers’ time, Stitch enables you to benefit from pre-built data pipelines, giving your engineers time to work on real business initiatives

- Instead of dumping every data point, Stitch lets you import only the data you need

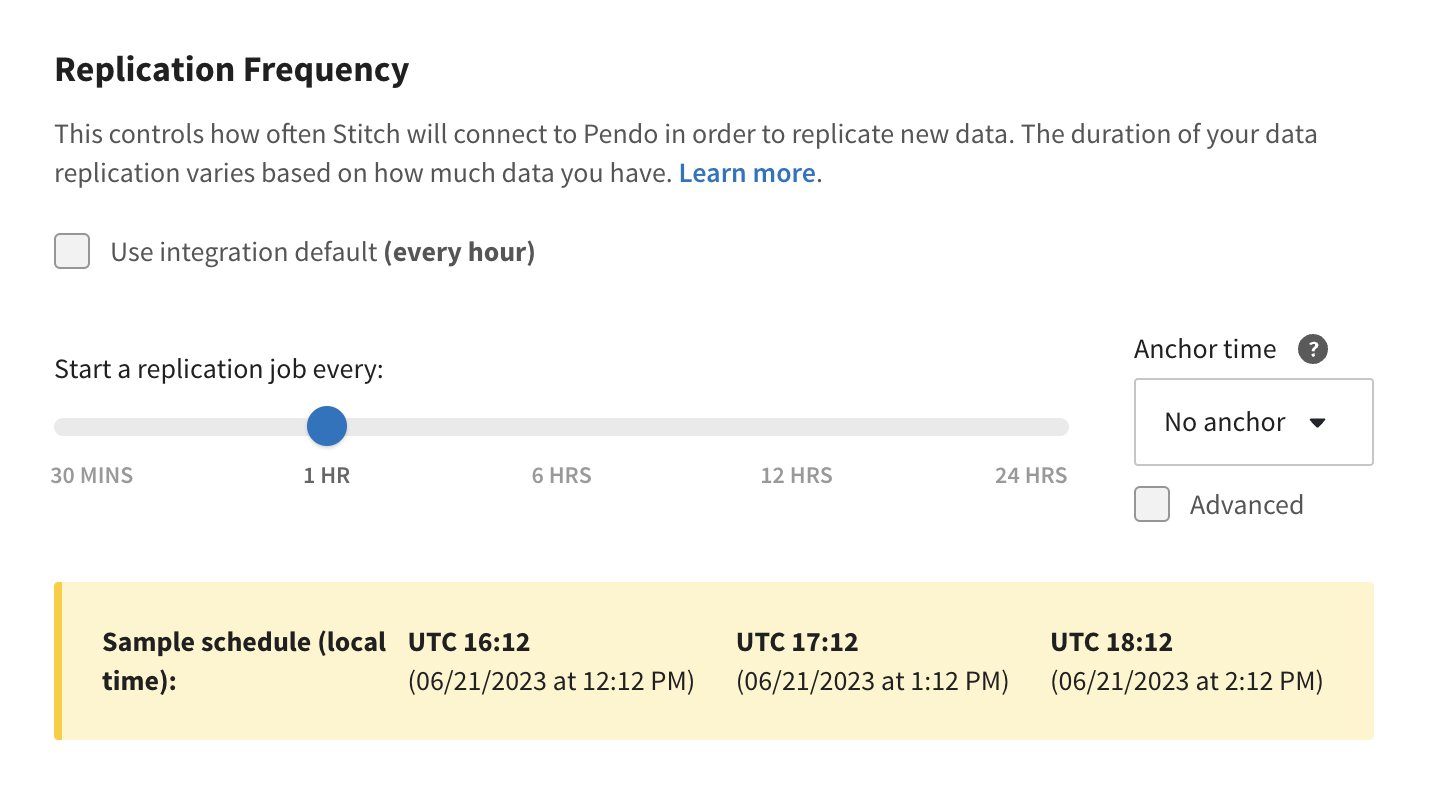

- Instead of worrying about bad data quality, Stitch can run scheduled data exports that consistently refresh data however often you need it, and catch data issues before they enter Snowflake

- Instead of troubleshooting errors, Stitch gives you a platform to rely on that is constantly addressing integration and API changes on your behalf to catch issues before they reach the end user

Stitch makes data ingestion into Snowflake easy

Stitch is an enterprise-grade, secure ETL platform that connects to 140+ of your favorite data sources, enabling you to create zero-maintenance cloud data pipelines in minutes. You can rapidly move data from your sources to Snowflake in just a few clicks, with no IT expertise required. It saves engineers’ time, creates a single source of truth for the business, and keeps pipelines updating automatically and continuously.

Ready to get started with Stitch for Snowflake? View our set-up video

We make getting started with Stitch easy. In this video, you’ll learn how to get connected to a free trial of Stitch directly from the Snowflake Partner Connect portal. Watch now to learn how Stitch can make data ingestion into Snowflake hassle- (and headache!) free.

Don't have admin access to your Snowflake account? Or prefer to connect to another database and still want a free 14-day trial of Stitch? Sign up here and connect to destinations like Snowflake, Amazon Redshift, Google BigQuery, Amazon S3, and more.

Give Stitch a try, on us

Stitch streams all of your data directly to your analytics warehouse.

- Set up in minutes

- Unlimited data volume during trial